X.4 Core Image¶

960 words on X.4 Overview

Core Image is a powerful new graphics engine that found its way into Mac OS X.4. Providing powerful compositing, being extendable by filters and utilising all the processing power it can find in your computer, it’s quite a cool beast. In my previous notes on it, I said I may be overly optimistic here, but I like it.

And that’s pretty much the state I remain in until today.

While the whole Core Image technology may be cool and modern, I haven’t even seen it at its full beauty outside of Apple’s demonstrations, simply because my old PowerBook doesn’t have a graphics chip that’s even close to being able to support Core Image. Apple claim that Core Image is smart enough to scale down to older machines, using the processors it finds there to achieve the same effects at a slower speed.

Apple’s foremost graphics demo application, Quartz Composer (which comes with the Developer Tools and which all the cool demos seem to be made with), won’t even launch on my computer because it lacks a recent graphics chip. I think that’s due to the lack of 3D power, though, rather than the Core Image aspects I mentioned above. Still, I’d like to note the irony that the files saved by Quartz Composer can still be displayed by QuickTime. At glacial speeds and incompletely, I might add, so QuickTime had better not even try to do this on older computers.

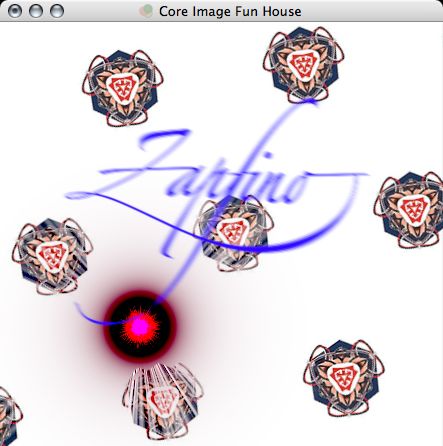

Core Image Fun House (also part of the Developer Tools), however, seems to run just fine on older computers as well. Aside from a horrendous tendency to crash frequently and its inability to have anything but a straight stack of layers, it should be sufficient to give you an idea of Core Image’s abilities. You can generate backgrounds and insert images. Those can be distorted or filtered. And there can even be animated transitions.

With slower computers, I recommend not using the example images supplied by Apple. They’re large enough to make your computer cry – or at least be painfully slow. Rather use a small image. Starting with a screenshot of Apple’s Core Image advertisement page and a few letters, I made the image below. To me it hints that Core Image gives you a fairly powerful set of tools. And one that can be used to create rather ugly things with very little effort.

Doing this kind of playing around (rather than any actual measurements), Core Image’s speed leaves a mixed impression. Standard filters on small images seem to run reasonably quickly. But once you have larger images, like an 800x800 image, even things like blurring start to feel slow, and changing the brightness seems to happen at no more than half the speed it does in GraphicConverter. This may be due to the whole image being updated at once after all the computations have been done rather than updating the display block-by-block like Photoshop does, but at the end of the day it makes my computer feel a few years older than it is.

So, for the time being, I don’t think I’ll get into Core Image too much. The only place where I can see myself using it actively is in the ever wonderful GraphicConverter, which started having support for Core Image graphics filters soon after the release of X.4. GraphicConverter’s UI for the filters isn’t exactly great or responsive yet, but I’m sure that Thorsten Lemke will manage to improve that soon.

While Thorsten certainly is one of the more active and experienced Mac programmers around, GraphicConverter has always been relatively slow to pick up new features offered by the OS. I assume that there are many reasons for that, ranging from the fact that GraphicConverter has been around for ages and still works on Mac OS 8 in its current version to the fact that Thorsten knows how to keep himself busy with gazillions of other improvements that are documented in GraphicConverter’s version history. That he was able to enhance GraphicConverter with Core Image fairly quickly (and with some Automator actions and Spotlight finding as well) suggests that both Thorsten and Apple are good at their respective jobs of programming and making the System’s new features easily available (even to ‘old-fashioned’ applications).

Initially I was tempted to go ahead and make some Core Image filters of my own. But as it turned out that I don’t really use Core Image all that much, and the documentation suggests that it’d be helpful to use Quartz Composer when starting to program a filter, there’s not too much point in doing this for me at this stage, so I can’t say a lot about that. It doesn’t look like there have been floods of cool filters coming along already, so people may be taking their time.

Apart from those basic problems, it also seemed like the capabilities of those filters are quite limited – even loops seem to be a problem. A shame… after seeing how slooow Java is on my computer with a little applet that one of the guys in our department wrote for drawing Mandelbrot and Julia sets (which lets you draw a route through the Mandelbrot set and it’ll ride along that route drawing an animation of the Julia sets at each point of the route), I had this little urge to come up with a clever and fast way to view those on my computer. A way that doesn’t involve using X11 to run the web browser on one of the Linux machines…
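For what it’s worth, the computation behind such a viewer is small, and it is built around exactly the kind of loop mentioned above. Here is a minimal sketch in Python. It is not the Java applet and not a Core Image filter; it assumes the usual escape-time iteration z → z² + c, and the function names, the ASCII rendering and the straight-line route are all made up for illustration.

```python
def julia_escape_time(z, c, max_iter=50):
    """Count iterations of z -> z*z + c until |z| exceeds 2 (capped at max_iter)."""
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def render_julia(c, width=60, height=24, max_iter=50):
    """Render the Julia set for parameter c as a list of ASCII rows.

    The viewport (x in [-1.6, 1.6], y in [-1.2, 1.2]) is an arbitrary choice.
    """
    shades = " .:-=+*#%@"  # slow-to-escape points get the darker characters
    rows = []
    for j in range(height):
        y = 1.2 - 2.4 * j / (height - 1)
        row = []
        for i in range(width):
            x = -1.6 + 3.2 * i / (width - 1)
            n = julia_escape_time(complex(x, y), c, max_iter)
            row.append(shades[n * (len(shades) - 1) // max_iter])
        rows.append("".join(row))
    return rows


def route(start, end, steps):
    """Yield evenly spaced points on a straight line from start to end."""
    if steps < 2:
        yield start
        return
    for k in range(steps):
        yield start + (end - start) * k / (steps - 1)


if __name__ == "__main__":
    # Ride along a short (hypothetical) route and draw one Julia set per point.
    for c in route(complex(-0.8, 0.0), complex(-0.8, 0.3), 3):
        print("c =", c)
        print("\n".join(render_julia(c)))
        print()
```

Each point on the route gives one frame of the animation. The inner loop in `julia_escape_time` is the part that has to run per pixel, which is why limited loop support in a filter language would get in the way.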

In short – no, I don’t have any real use for Core Image, no, I haven’t seen any compelling applications yet that make it look like a must-have. But I still hope that we will get them in the future.