Unicode Normalisation

828 words on Software

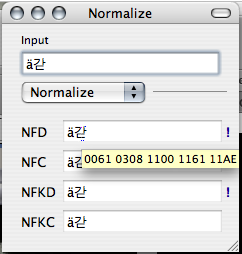

Some time back in 2002 Steffen implemented support for the various Unicode normalisation forms in UnicodeChecker. This meant that UnicodeChecker got both a utility that tells you which normalisation forms a string conforms to and a service that converts text to a specific normalisation form. All fancy and exciting from a Unicode point of view, but all I could ask at the time was: what the heck is that for?

Of course I quickly received an answer to that: some characters can be represented in Unicode in several different ways. For example, the simple ä can be encoded in a composed way, i.e. as a straightforward ä (U+E4), or in a decomposed way as the more complicated combination of the letter a (U+61) and the combining umlaut accent ̈ (U+308). While the composed accented characters are more explicit, their decomposed brethren are more flexible. For instance, it’s no problem to build doubly decorated characters like à̧ (e.g. U+61 U+327 U+300) with them. Similar mechanisms seem to exist for Korean, where syllables can be assembled from smaller component characters.
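To make the difference concrete, here is a small sketch using Python’s standard `unicodedata` module. Python is only my choice for illustration; this is not how UnicodeChecker works internally. The `code_points` helper is hypothetical and just prints strings in the U+XXXX notation used above.

```python
import unicodedata

def code_points(s: str) -> str:
    """Format a string as space-separated U+XXXX code points."""
    return " ".join(f"U+{ord(ch):X}" for ch in s)

composed = "\u00e4"       # ä as a single precomposed code point
decomposed = "a\u0308"    # a followed by the combining diaeresis (umlaut)

print(code_points(composed))     # U+E4
print(code_points(decomposed))   # U+61 U+308

# The two strings differ code point by code point...
print(composed == decomposed)    # False
# ...but they are canonically equivalent, so converting to one form makes them equal.
print(unicodedata.normalize("NFD", composed) == decomposed)   # True
print(unicodedata.normalize("NFC", decomposed) == composed)   # True

# A doubly decorated letter: a + combining cedilla + combining grave accent.
double = "a\u0327\u0300"
print(code_points(unicodedata.normalize("NFC", double)))
```

The last line shows how much of the doubly decorated sequence NFC manages to fold into precomposed characters; whatever has no precomposed equivalent stays as a combining mark.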

Once you start thinking about having to sort or find strings (and, unlike many ‘programmers’, by that I mean real strings, not just arrays of bytes…), it’s pretty clear that you need some kind of normalisation to get useful results. And that’s what all the normalisation business is about.
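A minimal sketch of why this matters for finding text, again in Python: a plain substring test fails when the two strings use different forms, while normalising both sides to the same form first finds the match. `normalised_find` is a hypothetical helper, and the index it returns refers to the normalised text, which need not match positions in the original string.

```python
import unicodedata

def normalised_find(haystack: str, needle: str, form: str = "NFC") -> int:
    """Return the index of needle in haystack after normalising both to `form`,
    or -1 if it is not found. The index refers to the normalised haystack."""
    return unicodedata.normalize(form, haystack).find(unicodedata.normalize(form, needle))

text = "Gr\u00fc\u00dfe aus M\u00fcnchen"   # typed text, composed ü (U+FC)
query = "Mu\u0308nchen"                      # the same word with a decomposed ü

print(query in text)                  # False: the code points differ
print(normalised_find(text, query))   # 10
```

The same idea applies to sorting: using a normalised copy of each string as the sort key keeps equivalent strings together.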

In more detail, there are the canonical normalisations in composed (C) and decomposed (D) forms, as described in the examples above for combined characters. In addition, there are the compatibility normalisations in composed (KC) and decomposed (KD) forms, which go even further and in a way dumb the text down for better searchability. For example, the ligature ﬁ (U+FB01) will be replaced by the two letters f and i when normalised with compatibility in mind, and similar things will happen for other code points to aid finding. As normalising to one of the ‘K’ forms can really break the text you hand it, I suppose that text normalised in that way is not meant to be passed back to the user but will find more uses in software’s internal magic.
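The kind of check UnicodeChecker offers (which forms does a string already conform to?) can be approximated with `unicodedata.is_normalized`, which exists from Python 3.8; the sketch assumes Python 3.9+ for the `list[str]` annotation. `conforming_forms` is a hypothetical helper. The ligature and superscript lines show the compatibility forms rewriting text that the canonical forms leave alone.

```python
import unicodedata

FORMS = ("NFC", "NFD", "NFKC", "NFKD")

def conforming_forms(s: str) -> list[str]:
    """Return the normalisation forms that s already satisfies."""
    return [form for form in FORMS if unicodedata.is_normalized(form, s)]

print(conforming_forms("\u00e4"))    # ['NFC', 'NFKC']  (composed ä)
print(conforming_forms("a\u0308"))   # ['NFD', 'NFKD']  (decomposed ä)
print(conforming_forms("\ufb01"))    # ['NFC', 'NFD']   (the ligature ﬁ)

# The canonical forms keep the ligature, the compatibility forms replace it...
print(unicodedata.normalize("NFC", "\ufb01") == "\ufb01")   # True
print(unicodedata.normalize("NFKC", "\ufb01"))              # fi
# ...and can change meaning: a superscript two becomes a plain digit.
print(unicodedata.normalize("NFKC", "x\u00b2"))             # x2
```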

One thing I recently discovered is that software will occasionally render equivalent text differently depending on the normalisation used. I assume this happens because, when asked to render text in C-normalisation, the software first tries to use a pre-made accented character if the font contains one, while for equivalent text in D-normalisation it assembles the character from the glyph for the base letter and the glyph for the accent. And when certain rendering mechanisms and certain fonts come together, things can end up looking broken when the decomposed form is used.

As these phenomena seem to depend on both the software and the fonts used, they can be a bit tricky to reproduce. Examples where I saw problems were Safari not rendering č properly in its decomposed form using Arial, and Firefox on the Mac failing to render pretty much any decomposed accented character properly, displaying a box next to the letter rather than an accent where it should be. (On Windows, Firefox seems to do a better job and renders the accent, but too far away from the letter it belongs to.) A short example could be this:

| | Decomposed | Composed |
|---|---|---|
| Arial | č | č |
| Standard font | à | à |

I guess this isn’t a terribly big problem, but it’s a hint that if you want to play it safe, you should use the composed normalisation for anything published on the web, as I haven’t yet seen any of those quirks when text is displayed in composed form.

The subtle thing here is that as long as you’re only typing (on the Mac at least), you seem to end up with text in C-normalisation anyway. But as soon as you start exchanging text with other applications, there can be unexpected surprises. For example, copying file names in the Finder (and, I assume, in many other applications) will give you text in decomposed form, because HFS+ normalises file names to a decomposed form before storing them (unlike UDF or NTFS, say). PDF files seem to prefer decomposed characters as well, so a little bit of copying and pasting can get you into situations you didn’t want to be in.
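If you follow the advice to publish composed text, a last normalisation step before publishing catches decomposed text that sneaked in via copy and paste. The sketch below is in Python; `prepare_for_web` is a hypothetical helper, and simulating a Finder file name with Python’s NFD is an assumption, since as far as I know HFS+ uses its own, slightly different decomposition rules.

```python
import unicodedata

def prepare_for_web(text: str) -> str:
    """Convert text to NFC so that precomposed characters are used wherever they exist."""
    return unicodedata.normalize("NFC", text)

# Simulate a file name copied from the Finder (approximated with NFD, see above).
typed = "R\u00e9sum\u00e9.pdf"
from_finder = unicodedata.normalize("NFD", typed)

print(len(typed), len(from_finder))            # 10 12
print(typed == from_finder)                    # False
print(prepare_for_web(from_finder) == typed)   # True
```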

As Korean seems to be strongly affected by the different decompositions (as far as I understand, Korean syllables are made up from a rather small number of letters, the jamo, and can be written in both composed and decomposed forms in Unicode), I wonder whether this aspect is better known among people who know Korean. As far as I can tell, for example, Firefox won’t render Korean properly when it’s stored in a decomposed Unicode form, which I’d consider a significant problem. I’d be interested to learn more about that.
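To get a feeling for how differently the two forms look at the code point level for Korean, Python’s `unicodedata` can decompose a precomposed Hangul syllable into its individual jamo and put it back together. This only illustrates the encoding side; it says nothing about how a particular browser or font renders the decomposed sequence.

```python
import unicodedata

syllable = "\uD55C"   # 한, HANGUL SYLLABLE HAN: one precomposed code point
jamo = unicodedata.normalize("NFD", syllable)

# NFD splits the syllable into three conjoining jamo (initial, vowel, final).
print([f"U+{ord(ch):X}" for ch in jamo])   # ['U+1112', 'U+1161', 'U+11AB']

# NFC recombines them into the single syllable.
print(unicodedata.normalize("NFC", jamo) == syllable)   # True

word = "\uD55C\uAD6D\uC5B4"   # 한국어 ("Korean language")
print(len(word), len(unicodedata.normalize("NFD", word)))   # 3 8
```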